Inside the Algorithmic Black Box: Why Explainable AI is a Legal Necessity in 2025

Estimated Reading Time: 4 minutes

Key Takeaways

- Explainable AI is becoming crucial for legal and ethical reasons.

- Major legislative changes in 2025 will require AI transparency.

- Techniques for explainability are evolving but face limitations.

- The future will be shaped by how well AI can be explained and regulated.

Table of Contents

- The Age of Black-Box Decision Making

- Why Explainable AI Is the Hottest Tech Battleground of 2025

- How Do We Crack Open the Black Box?

- The Road Ahead: Transparency or Turmoil?

- Join the Transparency Revolution

The Age of Black-Box Decision Making

Artificial intelligence now powers everything from who gets a job interview to how medical treatments are prioritized. But the explosive rise of AI has outpaced our ability to follow its logic. Researchers estimate that over half of all AI models in the wild today are “black-box” systems—impossible for humans to interpret directly (Springer, 2024).

Why does this matter?

- Bias & Discrimination: Without insight into how AI decisions are made, hidden biases creep in unnoticed.

- Accountability: Who takes the blame when an AI makes the wrong call—engineer, organization, or the AI itself?

- Trust: Users, regulators, and even developers struggle to trust what they can’t understand.

As one expert puts it, “The black-box nature of powerful algorithms is now a governance crisis, not just a technical challenge.”

Why Explainable AI Is the Hottest Tech Battleground of 2025

Regulatory Tsunami Incoming

2025 is shaping up to be a watershed for AI law:

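- The EU AI Act, which entered into force in August 2024 and phases in its obligations from 2025 onward, gives people affected by high-risk AI decisions a right to “meaningful explanations,” turning unexplained models into a legal liability in many sectors.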

- U.S. Congress is debating a Digital Accountability Act to enforce transparency on consumer-facing AI.

- India, Brazil, and Japan are drafting their own versions of explainability mandates, aiming to safeguard citizens from “algorithmic invisibility.”

These aren’t just policy white papers, either. Explainability requirements will soon be enforceable and auditable, and noncompliance could be ruinous for organizations that aren’t ready.

Industry Case Studies: The Real-World Stakes

- Healthcare: When ML-driven clinical tools make diagnostic errors that cannot be explained, patient safety is at risk, and hospitals face legal exposure.

- Finance: Loan algorithms have denied services to minority applicants based on patterns no human reviewer could catch, until regulators forced lenders to explain every “no.”

- Criminal Justice: Automated risk assessments have been shown to reinforce systemic biases unless made transparent (Springer, 2024).

The bottom line: Explainable AI isn’t just about clarity—it’s about compliance, ethics, and survival.

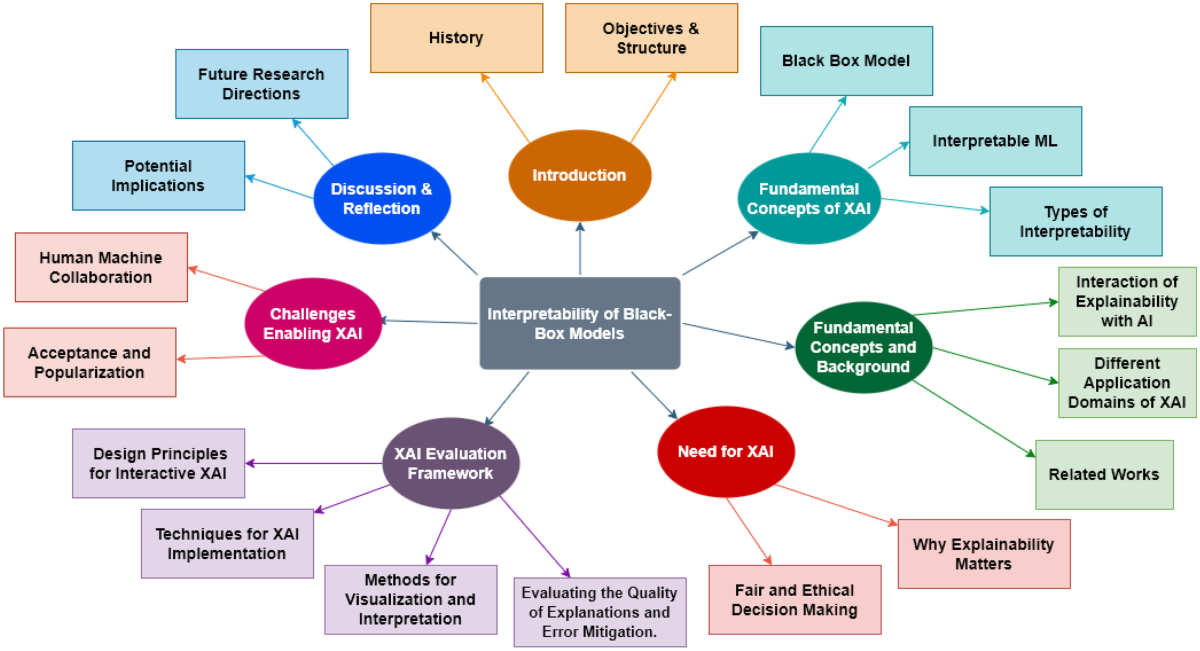

How Do We Crack Open the Black Box?

From Model to Motive: What Explainability Looks Like

“Explainable AI” (XAI) means more than just reading code. It’s about providing actionable, understandable reasons behind every AI-driven decision, even for non-experts. Leading XAI approaches include:

- Feature Importance Scores: What variables dominated the AI’s verdict?

- Counterfactuals: What would need to change for a different outcome?

- Surrogate Models: Simpler, interpretable models trained to approximate the black box so humans can review its behavior.

Crucially, real explainability means that everyone involved (users, regulators, and affected parties) can understand and challenge AI outputs.
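To make the first two approaches concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the `black_box` loan scorer, its weights, the feature names, and the four sample applicants are all invented. The importance function uses a fixed rotation of the column rather than a random shuffle so the numbers are reproducible; real tooling typically shuffles randomly and averages over several runs.

```python
# Hypothetical stand-in for an opaque model: approves a loan when a weighted
# score reaches a threshold. Weights and feature names are invented.
def black_box(applicant):
    score = (0.5 * applicant["income"]
             - 2.0 * applicant["debt"]
             + 0.0 * applicant["age"])
    return score >= 20.0

# Invented sample applicants (income and debt in thousands).
ROWS = [
    {"income": 60, "debt": 2, "age": 30},
    {"income": 30, "debt": 1, "age": 45},
    {"income": 80, "debt": 12, "age": 52},
    {"income": 50, "debt": 1, "age": 23},
]

def permutation_importance(model, rows, feature):
    """Fraction of decisions that flip when one feature's values are permuted.

    The permutation is a rotation by one position, which keeps the example
    deterministic; it is a simplification of the usual random shuffle.
    """
    baseline = [model(r) for r in rows]
    values = [r[feature] for r in rows]
    rotated = values[1:] + values[:1]
    flipped = 0
    for row, value, base in zip(rows, rotated, baseline):
        perturbed = dict(row, **{feature: value})
        if model(perturbed) != base:
            flipped += 1
    return flipped / len(rows)

def counterfactual(model, applicant, feature, step, max_steps=1000):
    """Smallest multiple of `step` added to `feature` that flips the decision."""
    original = model(applicant)
    for k in range(1, max_steps + 1):
        candidate = dict(applicant, **{feature: applicant[feature] + k * step})
        if model(candidate) != original:
            return candidate
    return None  # no flip found within the search budget

for name in ("income", "debt", "age"):
    print(name, permutation_importance(black_box, ROWS, name))

denied = ROWS[1]
print(counterfactual(black_box, denied, "income", step=1))
```

On this toy data, income scores 0.5, debt 0.25, and age 0.0, which matches the invented weights: age never affects the outcome. The counterfactual for the denied applicant is an income of 44 instead of 30, the kind of statement ("you would have been approved with this income") that an affected person can actually act on or contest.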

Can We Ever Fully Explain Deep Learning?

Here’s the hard truth: no method makes every neural net truly transparent. Some trade accuracy for clarity; others risk oversimplifying complex tradeoffs. The race is on to balance performance with legibility—before the law makes that non-negotiable.
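The oversimplification risk can be measured rather than just asserted. The sketch below fits the simplest possible surrogate, a single income threshold, to the outputs of a hypothetical black box and reports its fidelity, meaning the share of cases where the surrogate agrees with the model it claims to explain. The `black_box` rule, the sample data, and the helper names are invented for illustration.

```python
# Hypothetical opaque model with an interaction between two features.
def black_box(income, debt):
    return (income >= 40 and debt <= 10) or income >= 70

# Invented (income, debt) pairs, in thousands.
ROWS = [(30, 2), (45, 5), (45, 15), (60, 8),
        (60, 20), (75, 25), (20, 1), (80, 3)]

def fit_stump(rows, labels):
    """Fit a one-rule surrogate: approve when income >= threshold.

    Returns the threshold with the highest fidelity to the black-box
    labels, together with that fidelity (agreement rate, 0.0 to 1.0).
    """
    def fidelity(threshold):
        agree = sum((income >= threshold) == label
                    for (income, _), label in zip(rows, labels))
        return agree / len(rows)

    thresholds = sorted({income for income, _ in rows})
    best = max(thresholds, key=fidelity)  # first threshold with top fidelity
    return best, fidelity(best)

labels = [black_box(income, debt) for income, debt in ROWS]
threshold, fidelity = fit_stump(ROWS, labels)
print(f"surrogate rule: approve if income >= {threshold}")
print(f"fidelity to black box: {fidelity:.2f}")
```

Here the best single-threshold rule agrees with the black box on only 6 of 8 cases (fidelity 0.75), because it cannot express the debt condition. An explanation built on that surrogate would be easy to read and wrong a quarter of the time, which is why fidelity should be reported alongside any surrogate-based explanation.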

The Road Ahead: Transparency or Turmoil?

With governments, investors, and the public demanding “show your work,” AI teams are scrambling to retrofit transparency into legacy systems. Meanwhile, startups promising “explainability as a service” are booming.

But the stakes are bigger than compliance:

- Will explainer tech uncover hidden biases—or just give companies plausible deniability?

- Can regulated transparency boost AI adoption, or will it stifle innovation?

- Who gets to decide what counts as a “reasonable explanation”—engineers, judges, or the public?

The answers will define both the winners and the fallout of the AI age.

Join the Transparency Revolution

The black box is cracking open, whether tech giants like it or not. As 2025 unfolds, explainable AI will be at the heart of every debate about fairness, responsibility, and power in the digital era.

How would your life—or your industry—change if every AI had to explain its choices? Share your perspective below or join the global #ExplainableAI conversation. The future of intelligent systems—and the people they impact—depends on it.
